Key takeaways for you – What is Googlebot in SEO

- Googlebot is Google’s web crawler. It collects information about web pages to keep Google’s database up to date.

- Googlebot finds new or updated web pages by following links, reading sitemaps, and picking up URLs that site owners submit for crawling.

- After finding a page, it downloads the page’s assets, such as images, videos, and scripts, and analyses its content, including text, HTML code, and metadata.

- Google then indexes the page, adding it to the Google Search index, a database of the web pages Google has crawled and considers valuable and relevant.

- To optimize your website for Googlebot, you can use robots.txt, nofollow links, sitemaps, faster site speed, image optimization, and a clean URL structure.

- Providing mind-boggling content and excellent SEO, along with a fabulous user experience, can help Googlebot discover your site and crawl it more often.

What if I asked you to spend an entire night under the sky counting every star in it?

Sounds crazy, doesn’t it?

Rightly so. We all know it is a next-to-impossible task.

But Google and other search engines do something similar: they visit websites across the web and collect information about them.

In 2008, Google reported knowing of 1 trillion unique URLs on the web. By 2013 that figure had jumped to 30 trillion pages, and by 2017 it had reached 130 trillion.

After seeing these numbers, I am sure you will agree with me that Google is achieving a huge feat.

But how has it made this impossible task possible?

The answer is: with the help of bots.

And the best part? Understanding these bots and how they work can help boost your site’s SEO.

So let’s not waste any more time and find out what Googlebot is in SEO and how it works.

What is Googlebot in SEO?

Every search engine has its own bot, also called a web crawler or spider, that collects data from web pages. Googlebot is Google’s web crawler.

Simply put, “crawler” or “bot” is a common term for a program that automatically discovers and scans websites by following links from one web page to another.

Google has a ton of crawlers that it sends to every nook and corner of the web to find pages and check what they contain.

They search and read new and updated content and suggest what needs to be indexed. They gather information about each page and keep Google’s database up-to-date.
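To make the link-following idea concrete, here is a minimal sketch of a breadth-first crawler in Python. It is a toy, not how Googlebot is actually built. `crawl` and `LinkExtractor` are hypothetical names, and the page fetcher is passed in as a function (for example, one wrapping an HTTP client) so the crawl logic can be tried without touching the network.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, List, Optional
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url: str, fetch: Callable[[str], Optional[str]], max_pages: int = 100) -> List[str]:
    """Breadth-first crawl: fetch a page, queue the links it contains, repeat.

    `fetch` is a hypothetical callable returning a page's HTML, or None if
    the page could not be fetched.
    """
    seen = {start_url}
    queue = deque([start_url])
    visited: List[str] = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        parser.close()
        for href in parser.links:
            # Resolve relative links and drop #fragments so each page is queued once
            absolute, _fragment = urldefrag(urljoin(url, href))
            # Skip mailto:, javascript: and other non-web links
            if absolute.startswith(("http://", "https://")) and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited
```

A real crawler would also check robots.txt, limit its request rate, and render JavaScript, all of which this sketch leaves out.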

What are the different types of Google bots?

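The name Googlebot is often used as an umbrella term for all the crawlers Google deploys, although strictly speaking Google reserves it for its main search crawlers, Googlebot Smartphone and Googlebot Desktop. Here are some common Google crawlers you may see in your site’s server logs: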

- APIs-Google

- AdsBot Mobile Web Android

- AdsBot Mobile Web

- AdsBot

- AdSense

- Googlebot Image

- Googlebot News

- Googlebot Video

- Googlebot Desktop

- Googlebot Smartphone

- Mobile AdSense

- Mobile Apps Android

- Feedfetcher

- Google Read Aloud

- Duplex on the web

- Google Favicon

- Web Light

- Google StoreBot

- Google Site Verifier
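Each of these crawlers announces itself with a user-agent string, so one quick way to tell them apart in your server logs is to look for their product tokens. The sketch below assumes a hand-picked subset of tokens from Google’s crawler documentation (check the current list before relying on it). `identify_google_crawler` is a hypothetical helper. Anyone can fake a user-agent string, so a match only tells you what a visitor claims to be.

```python
from typing import Optional

# Assumed subset of Google crawler tokens, mapped to the names used in the list above.
# Order matters: longer tokens must be checked before shorter tokens they contain
# (e.g. "AdsBot-Google-Mobile" before "AdsBot-Google", "Googlebot-Image" before "Googlebot").
CRAWLER_TOKENS = [
    ("AdsBot-Google-Mobile-Apps", "Mobile Apps Android"),
    ("AdsBot-Google-Mobile", "AdsBot Mobile Web"),
    ("AdsBot-Google", "AdsBot"),
    ("Mediapartners-Google", "AdSense"),
    ("APIs-Google", "APIs-Google"),
    ("Googlebot-Image", "Googlebot Image"),
    ("Googlebot-Video", "Googlebot Video"),
    ("FeedFetcher-Google", "Feedfetcher"),
    ("Storebot-Google", "Google StoreBot"),
    ("Google-Site-Verification", "Google Site Verifier"),
    ("Googlebot", "Googlebot"),
]


def identify_google_crawler(user_agent: str) -> Optional[str]:
    """Return the crawler name a user-agent string claims, or None if no token matches."""
    ua = user_agent.lower()
    for token, name in CRAWLER_TOKENS:
        if token.lower() in ua:
            if name == "Googlebot":
                # The smartphone crawler's string mimics a mobile browser ("Mobile Safari")
                return "Googlebot Smartphone" if "mobile" in ua else "Googlebot Desktop"
            return name
    return None
```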

How does Googlebot work?

Let me explain how Googlebot works in simple, precise terms.

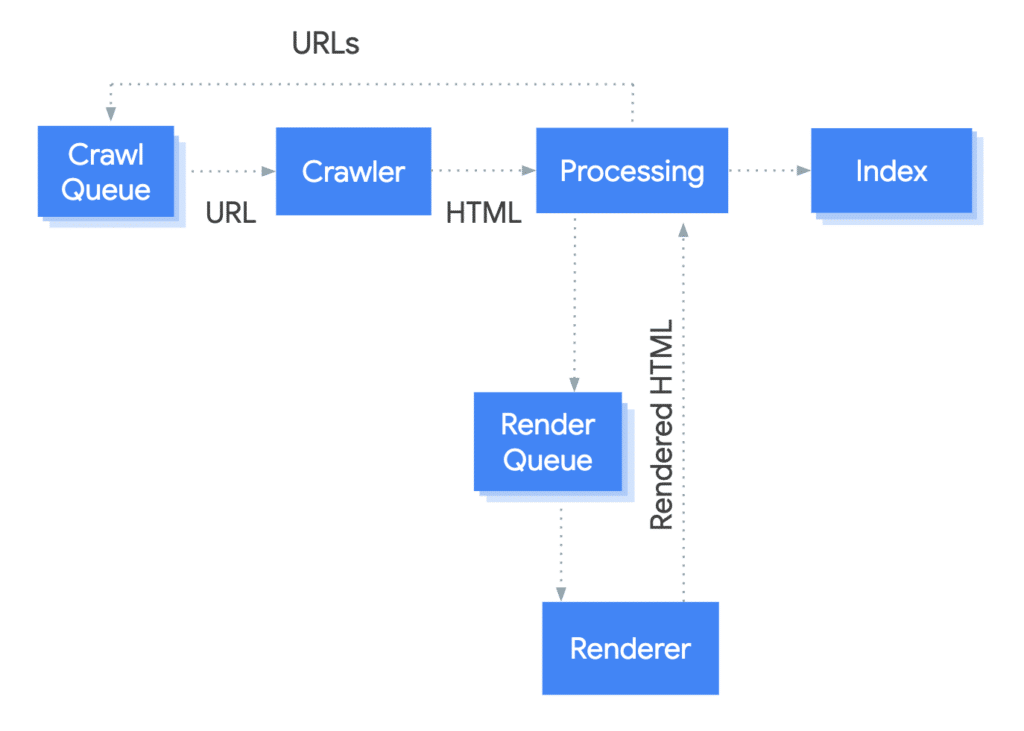

- Googlebot starts by finding new or updated web pages on the internet. It discovers them by following links from other web pages, by reading sitemaps, and by picking up URLs that site owners submit for crawling.

- After finding a web page, it downloads the page along with its assets, such as images, videos, and scripts.

- Then it analyses the page’s content, including its text, HTML code, and metadata.

- Next, Google indexes the page and adds it to the Google Search index, a database of the web pages Google has crawled and considers valuable and relevant.

- Google then uses complex algorithms to decide which indexed pages should appear in search results for a given query.

- When users enter search queries, Google shows them the pages that are relevant and authoritative and that offer the best user experience.

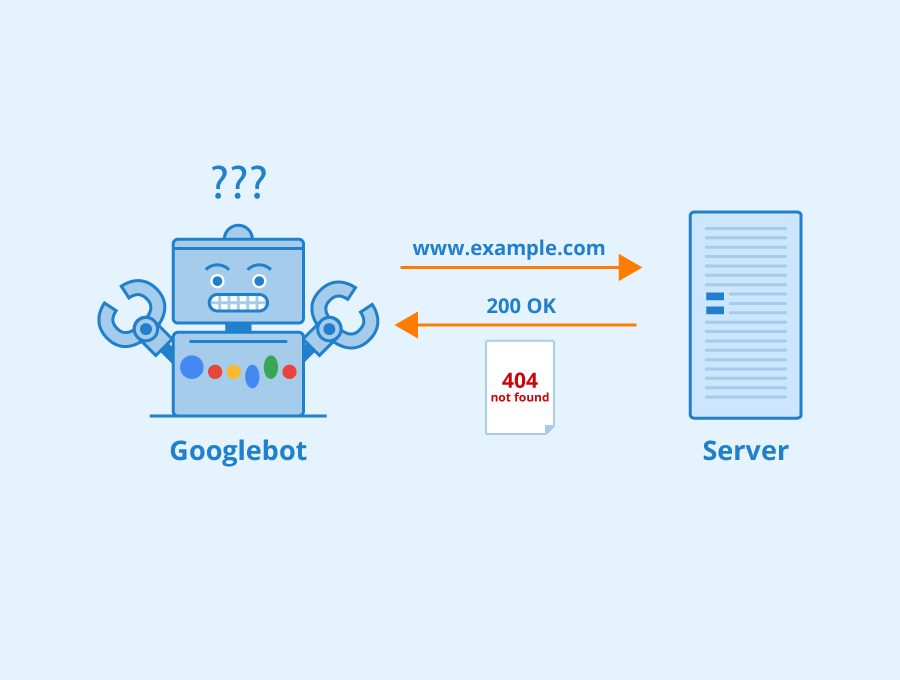

If the bot finds changed or broken links, it makes a note of those, too.

Over time, Googlebot has evolved, and it now aims to render a web page the same way a user’s browser displays it, including running JavaScript.
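The indexing and ranking steps above can be illustrated with a toy inverted index, the basic data structure behind search engines: each word points to the set of pages that contain it. This is a simplified sketch, not how Google works. The helper names are hypothetical, and ranking by how often the query words appear is an assumption made purely for illustration. Google’s real ranking uses many more signals.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


def tokenize(text: str) -> List[str]:
    """Lower-case the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: Dict[str, str]) -> Dict[str, Set[str]]:
    """Inverted index: each word maps to the set of URLs whose text contains it."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index: Dict[str, Set[str]], pages: Dict[str, str], query: str) -> List[str]:
    """Return URLs containing every query word, most query-word occurrences first."""
    words = tokenize(query)
    if not words:
        return []
    # Only pages that contain all of the query words are candidates
    matches = set.intersection(*(index.get(word, set()) for word in words))

    def score(url: str) -> int:
        tokens = tokenize(pages[url])
        return sum(tokens.count(word) for word in words)

    # Highest score first; ties broken alphabetically so results are deterministic
    return sorted(matches, key=lambda url: (-score(url), url))
```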

How do you optimise your site for Googlebot?

By now you have probably guessed that there are ways to optimise your website for Googlebot. But optimising it for Googlebot doesn’t mean you can ignore user experience.

After all, Google encourages site owners to build great websites. Though that advice sounds vague, it means you need to satisfy your users as well as Googlebot. That’s the surest way to earn organic traffic.

You don’t need to worry about doing double work here. Google is extremely user-focused, so when you use the right SEO practices, your users will be happy too.

These tips will make it easier for you to control crawlers and get Googlebot to crawl your website more often:

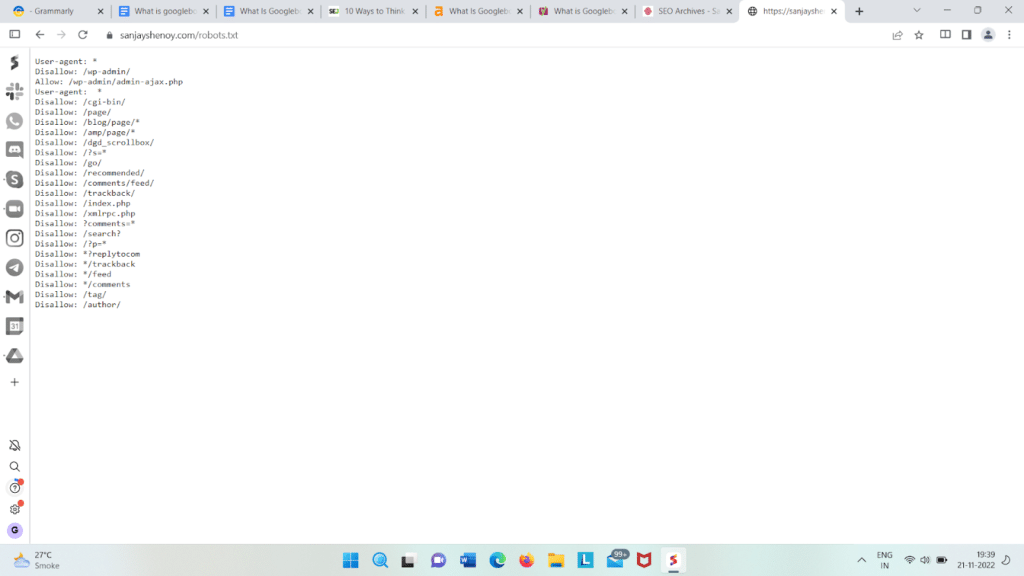

Robots.txt

Robots.txt is a file in the root directory of a website. For example, the robots.txt file for www.example.com lives at https://www.example.com/robots.txt.

It is one of the first things a crawler looks for when it visits a site. With this file, you can tell Googlebot, or any other crawler, which pages or sections of your site it may crawl and which it should stay out of. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still show up in search results if other pages link to it.

Now, Googlebot being blocked by robots.txt is a problem that many site owners face.

How can you fix this?

The answer is simple: open your robots.txt file and remove or edit the Disallow rule that blocks the pages you want Googlebot to crawl. And you are good to go.
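Before editing the file, it helps to check which URLs your current rules actually block. Here is a sketch using Python’s standard-library `urllib.robotparser`. The robots.txt content, the `is_allowed` helper, and the `SomeOtherBot` agent are hypothetical examples. This parser applies the first matching rule in a group, while Google picks the most specific (longest) matching rule and supports `*` and `$` wildcards. For complicated files, treat the result as a rough check and confirm it with Google’s own tools in Search Console.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: Googlebot may crawl everything except /private/,
# while all other crawlers are kept out of /tmp/.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /tmp/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules let user_agent crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for agent, url in [
    ("Googlebot", "https://www.example.com/private/page.html"),
    ("Googlebot", "https://www.example.com/tmp/cache.html"),
    ("SomeOtherBot", "https://www.example.com/tmp/cache.html"),
]:
    print(agent, url, "allowed" if is_allowed(ROBOTS_TXT, agent, url) else "blocked")
```

Notice that Googlebot is allowed into /tmp/. A crawler with its own User-agent group follows only that group, not the rules listed under `*`.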

Nofollow links

You can add the rel="nofollow" attribute to a link to tell Googlebot not to follow it. But Google treats nofollow as a hint rather than a strict rule, so the crawler may not ignore the link completely.
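To check which links on a page carry nofollow, you can scan the page’s HTML. Here is a sketch using Python’s built-in HTML parser. `audit_links` is a hypothetical helper, and it only sees the `<a>` tags present in the HTML you pass in. Links added later by JavaScript won’t show up.

```python
from html.parser import HTMLParser
from typing import List, Tuple


class NofollowAuditor(HTMLParser):
    """Records each link's href and whether its rel attribute includes 'nofollow'."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[Tuple[str, bool]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # rel may hold several space-separated values, e.g. rel="nofollow noopener";
        # a bare <a rel> gives None, hence the `or ""`
        rel_values = (attributes.get("rel") or "").lower().split()
        self.links.append((href, "nofollow" in rel_values))


def audit_links(html: str) -> List[Tuple[str, bool]]:
    """Return (href, is_nofollow) for every link in the HTML, in document order."""
    auditor = NofollowAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.links
```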

Sitemap

Sitemaps are another way to help Googlebot discover the pages on your website. Submit your sitemap to Google through Google Search Console. Check out my detailed blog post on sitemaps to learn how to optimise them for crawlers.
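If your CMS doesn’t generate a sitemap for you, building one is straightforward. This sketch uses Python’s built-in `xml.etree.ElementTree` to produce the XML format from the sitemaps.org protocol: a `<urlset>` of `<url>` entries, each with a `<loc>` and an optional `<lastmod>`. The `build_sitemap` name and the example URLs are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Optional, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: Iterable[Tuple[str, Optional[str]]]) -> str:
    """Build sitemap XML from (url, lastmod) pairs.

    lastmod is a W3C date string such as "2024-01-31", or None to omit it.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & in URLs automatically
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://www.example.com/", "2024-01-31"),
    ("https://www.example.com/about", None),
]))
```

You could save the output as sitemap.xml in your site’s root directory and then submit its URL in Search Console.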

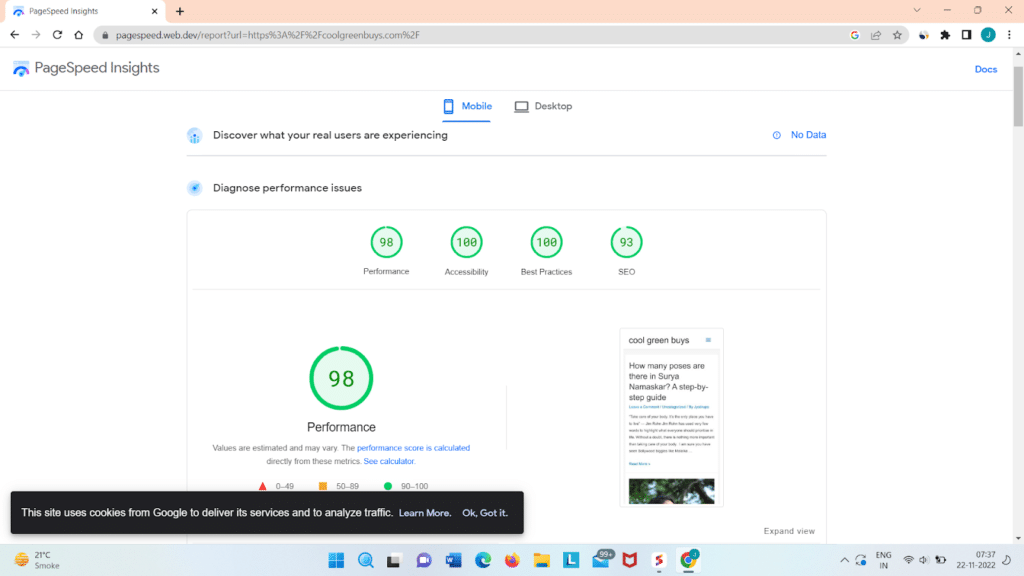

Site speed

Did you know that if your site loads very slowly, Google may rank it lower? Site speed is a ranking factor, especially on mobile devices. A slow server can also reduce how many pages Googlebot crawls on your site.

You can check your site’s speed, and find out why it is slow, with Google’s PageSpeed Insights.

You can also use GTmetrix, which grades your page’s performance. Older versions of GTmetrix graded pages on Google PageSpeed and YSlow criteria. Current versions use Google’s Lighthouse.
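If you want to check speeds for many pages, PageSpeed Insights also has an API. The sketch below assumes the v5 endpoint, and it assumes that the response reports the Lighthouse performance score (0 to 1) under `lighthouseResult.categories.performance.score`. Check Google’s API reference before relying on either, and note that regular use may require an API key. `psi_request_url`, `performance_score`, and `check_page_speed` are hypothetical helper names.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed PageSpeed Insights API v5 endpoint
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build a request URL; strategy is "mobile" or "desktop"."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def performance_score(psi_response: dict) -> int:
    """Extract the Lighthouse performance score (0-1 in the response) as 0-100."""
    score = psi_response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


def check_page_speed(page_url: str) -> int:
    """Call the API over the network and return the page's performance score."""
    with urlopen(psi_request_url(page_url), timeout=60) as response:
        return performance_score(json.load(response))
```

Splitting URL building and score extraction from the network call keeps both easy to test with saved responses.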

Image optimization

Google has repeatedly stressed the importance of image optimization. Optimized images help Googlebot understand what each image shows and how it makes your content more useful to readers.

To optimize your images, describe each image in its file name using just a few words, and describe it in a little more detail in its alt text. Google also supports image sitemaps, which help it discover the images on your site.
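To find images that are missing alt text, you can scan a page’s HTML. Here is a sketch using Python’s built-in HTML parser. `find_images_missing_alt` is a hypothetical helper, and it only sees the `<img>` tags present in the HTML you pass in. It flags both missing and empty alt text for review. Keep in mind that an empty alt (`alt=""`) is the right choice for purely decorative images.

```python
from html.parser import HTMLParser
from typing import List


class AltTextAuditor(HTMLParser):
    """Collects the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self) -> None:
        super().__init__()
        self.missing_alt: List[str] = []

    # Self-closing tags like <img ... /> also reach this method, because
    # HTMLParser's default handle_startendtag calls handle_starttag.
    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        if alt is None or not alt.strip():
            self.missing_alt.append(attributes.get("src") or "(no src)")


def find_images_missing_alt(html: str) -> List[str]:
    """Return the src of each image whose alt text is missing or blank."""
    auditor = AltTextAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.missing_alt
```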

Clean URL structure

A clean, well-defined URL structure improves user experience and can help you rank higher.

Googlebot will find it easier to understand how the pages on your website relate to each other if your URLs include their parent pages.

Here are some examples:

https://example.com/parent-page/child-page/

https://en.wikipedia.org/wiki/Aviation

That said, Google’s John Mueller advises against changing the URLs of very old pages that already rank well in the SERPs.
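If you generate URLs from page titles, a small helper can keep them clean and nested under their parent pages. This is a sketch with hypothetical helper names (`slugify`, `build_url`). The slug rules (lowercase ASCII letters and digits separated by hyphens) are a common convention, not a Google requirement. Given Mueller’s advice above, use it for new pages rather than for renaming old URLs that already rank.

```python
import re
import unicodedata
from typing import Iterable


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL segment."""
    # Split accented letters into base letter + accent mark, then drop the non-ASCII marks
    ascii_title = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Collapse every run of characters other than letters/digits into one hyphen
    return re.sub(r"[^a-z0-9]+", "-", ascii_title.lower()).strip("-")


def build_url(base: str, titles: Iterable[str]) -> str:
    """Join one slug per hierarchy level (parent first) under the base URL."""
    path = "/".join(slugify(title) for title in titles)
    return f"{base.rstrip('/')}/{path}/"


print(build_url("https://example.com", ["Parent Page", "Child Page"]))
```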

Are you sure it’s a real Googlebot and not a malicious one?

This sounds scary, doesn’t it?

Websites often block SEO tools and malicious bots, which is why many of these bots pretend to be Googlebot to get access.

But here’s the deal: Google has made your life much easier by publishing the IP address ranges its crawlers use, which you can check to verify whether a request really comes from Google.
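Checking a visitor’s IP against those published ranges takes only Python’s built-in `ipaddress` module. This sketch assumes a JSON format with a `prefixes` list of objects, each holding an `ipv4Prefix` or an `ipv6Prefix`. As far as I know, that matches the googlebot.json file linked from Google’s “Verifying Googlebot” documentation, but check the current file before relying on it. The sample prefixes are reserved documentation ranges, not Google’s real ones. `load_prefixes` and `ip_in_ranges` are hypothetical helpers.

```python
import ipaddress
import json
from typing import List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# Hypothetical sample in the assumed format. These are reserved documentation
# ranges (RFC 5737 and RFC 3849), NOT Google's real crawler ranges.
SAMPLE_RANGES_JSON = """
{"prefixes": [{"ipv4Prefix": "192.0.2.0/24"}, {"ipv6Prefix": "2001:db8::/32"}]}
"""


def load_prefixes(ranges_json: str) -> List[Network]:
    """Parse the ranges file into a list of IPv4/IPv6 network objects."""
    data = json.loads(ranges_json)
    networks: List[Network] = []
    for entry in data.get("prefixes", []):
        prefix = entry.get("ipv4Prefix") or entry.get("ipv6Prefix")
        if prefix:
            networks.append(ipaddress.ip_network(prefix))
    return networks


def ip_in_ranges(ip: str, networks: List[Network]) -> bool:
    """True if ip falls inside any of the networks."""
    address = ipaddress.ip_address(ip)
    # `in` simply returns False when IP versions differ (IPv4 address vs IPv6 network)
    return any(address in network for network in networks)
```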

Conclusion

To get Googlebot to visit your site often, you need to make technically sound choices and provide mind-boggling content. Excellent SEO and a fabulous user experience go hand in hand to help Googlebot not only discover your site but also crawl it more often.

I have tried to cover the different ways you can invite Googlebot to your site and optimize your site for it. I hope I have helped you understand how these bots work and how you can think like them to boost your site’s SEO.

If you still have questions, shoot them my way in the comments below.

FAQs

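How do I block Googlebot?

You can stop Googlebot from indexing a page, and from following the links on it, by adding the following meta tag to the head section of the page:

```html
<meta name="googlebot" content="noindex, nofollow">
```

Googlebot has to crawl the page to see this tag, so don’t also block the page in robots.txt. If you want to stop Googlebot from crawling a page at all, use a Disallow rule in robots.txt instead.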

What is a Googlebot user agent?

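A user agent is any software that retrieves and renders web content and facilitates end users’ interaction with it. Browsers are user agents, and so are crawlers such as Googlebot, which identifies itself with a user-agent string containing the word “Googlebot”.

To allow or block Googlebot from some of your content, name it as the user agent in your website’s robots.txt file. For example, the rules below let Googlebot crawl the whole site (an empty Disallow blocks nothing) while keeping Googlebot-Image out of the /personal directory:

```text
User-agent: Googlebot
Disallow:

User-agent: Googlebot-Image
Disallow: /personal
```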

What browser does Googlebot use?

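Googlebot renders pages with an evergreen (regularly updated) version of Chromium, the open-source browser project behind Chrome, so it sees pages much as a recent version of Chrome would.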

Can Googlebot read images?

Yes. Googlebot crawls images and reads their metadata, including alt text. Make your alt text brief but highly informative.

Does Googlebot hurt SEO?

Googlebot itself doesn’t hurt your SEO, but how well your site gets crawled depends on its quality. According to John Mueller, poor site quality discourages Googlebot from crawling a website.

How do I verify that a visitor is really Googlebot?

1. Run a reverse DNS lookup on the accessing IP address from your logs, for example with the host command.

2. Check that the domain name ends in googlebot.com or google.com.

3. Run a forward DNS lookup (again with the host command) on the domain name retrieved in step 1.

4. Verify that it resolves to the same IP address as the original accessing IP address in your logs.
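These steps can be scripted. Here is a sketch in Python using the standard library’s resolver functions. `is_verified_googlebot` is a hypothetical helper, and its domain list mirrors the steps above rather than an exhaustive list from Google. The lookup functions are parameters, so you can swap in fakes to test the logic offline.

```python
import ipaddress
import socket
from typing import Callable, List

# Domains a genuine Googlebot hostname should end in (per the steps above)
GOOGLE_DOMAINS = (".googlebot.com", ".google.com")


def reverse_lookup(ip: str) -> str:
    """Step 1: reverse DNS (PTR) lookup, IP address -> hostname."""
    hostname, _aliases, _addresses = socket.gethostbyaddr(ip)
    return hostname


def forward_lookup(hostname: str) -> List[str]:
    """Step 3: forward DNS lookup, hostname -> every IPv4/IPv6 address it resolves to."""
    return [info[4][0] for info in socket.getaddrinfo(hostname, None)]


def is_verified_googlebot(
    ip: str,
    reverse: Callable[[str], str] = reverse_lookup,
    forward: Callable[[str], List[str]] = forward_lookup,
) -> bool:
    """Return True only if ip passes the reverse-then-forward DNS check."""
    try:
        hostname = reverse(ip).rstrip(".").lower()
    except OSError:  # socket.herror / socket.gaierror: no PTR record
        return False
    # Step 2: the hostname must sit under one of Google's domains
    if not hostname.endswith(GOOGLE_DOMAINS):
        return False
    try:
        addresses = forward(hostname)
    except OSError:
        return False
    # Step 4: the hostname must resolve back to the original IP
    target = ipaddress.ip_address(ip)
    return any(ipaddress.ip_address(addr) == target for addr in addresses)
```

DNS lookups are slow, so if you run this over busy server logs, cache the result for each IP address.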